Semantic Search:

Transforming the Search Experience for Modern Users

Mon Jul 24 2023

Introduction

Search strategies aim to improve the precision and relevance of search results for user queries. In this post, we explore the evolution of search, starting with traditional keyword-based search and then moving to more advanced approaches. These advanced approaches try to understand the context and meaning of the user's query or phrase, allowing for a more intuitive search experience. Let's assess each of these search strategies in turn, with a particular focus on our use case.

Normal or Keyword Text Search: In keyword text search, the focus is on exact term or keyword matching. Users input a search query, and the system retrieves videos that contain the exact term in their metadata or descriptions. For example, if a user searches for "cat videos", the system finds videos with the exact term "cat videos" in their metadata or descriptions.

Wildcard Search: As search technology advanced, a new strategy called wildcard search came into the picture. It expands the search by considering variations or partial matches of the search query. For instance, if a user searches for "cat videos" using a wildcard search, the system can retrieve videos with terms like "angry cat videos" or "funny cat videos" that closely resemble the query.

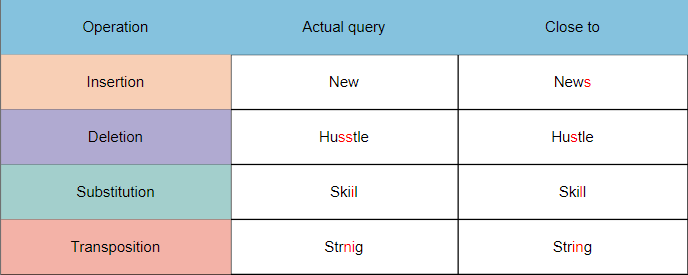
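The difference between the two strategies can be sketched in a few lines of Python. This is only an illustration using the standard library's shell-style patterns; the titles and the function names `keyword_search` and `wildcard_search` are made up for the example and are not part of our system.

```python
from fnmatch import fnmatchcase

# Hypothetical video titles used only for this illustration.
titles = ["funny cat videos", "angry cat videos", "cats playing video", "dog videos"]

def keyword_search(query, items):
    """Return items that contain the query as an exact phrase (case-insensitive)."""
    return [t for t in items if query.lower() in t.lower()]

def wildcard_search(pattern, items):
    """Return items matching a shell-style pattern, where * matches any run of characters."""
    return [t for t in items if fnmatchcase(t.lower(), pattern.lower())]

exact = keyword_search("cat videos", titles)
partial = wildcard_search("*cat* video*", titles)
```

Here `exact` holds only the two titles containing the literal phrase, while `partial` also picks up "cats playing video" because the pattern tolerates extra characters around each term.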

Fuzzy Search: Unlike exact matches, which require the query and the target to be identical, fuzzy search allows for some degree of similarity or variation in the results. This is particularly useful when dealing with typos, misspellings, or slight variations in data. One popular algorithm for fuzzy string matching is the Levenshtein distance, also known as the edit distance. It measures the minimum number of single-character edits (insertions, deletions, or substitutions) required to transform one string into another. The smaller the Levenshtein distance between two strings, the more similar they are.
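As a minimal sketch of the edit distance described above, the following function uses the standard dynamic-programming formulation and keeps only one row of the table at a time. The function name `levenshtein` is our own choice for the example; search engines typically use optimized variants of this idea.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions to turn a into b."""
    # prev[j] is the distance between the first i-1 characters of a and the first j characters of b.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]  # turning a[:i] into an empty string takes i deletions
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(
                prev[j] + 1,         # delete ca
                curr[j - 1] + 1,     # insert cb
                prev[j - 1] + cost,  # substitute ca with cb (free if they are equal)
            ))
        prev = curr
    return prev[-1]

distance = levenshtein("kitten", "sitting")
```

For the classic pair "kitten" and "sitting" the distance is 3: two substitutions and one insertion.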

Semantic Search: This era heralded the advent of semantic search, a strategy built to decipher the context and meaning behind a query, rather than just mechanically matching keywords. For instance, when a user keys in 'entertaining cat videos', the semantic search system comprehends the request and serves up videos that are fun and feature cats, even if those specific keywords are absent from the video's description or title. It could yield results like 'Laughing at hilarious feline antics' or 'Playful kittens create joyous chaos', recognizing the user's desire for amusing cat content. Thus, semantic search can outperform conventional text or fuzzy/wildcard searches by interpreting user intent and providing more contextually relevant video suggestions.

The stage is now set for our exploration of an approach that is reshaping the modern user's search experience. The problem we solved is video suggestion: retrieving similar videos from a database filled with metadata, descriptions, and thumbnails of various videos, with the aim of offering highly relevant and contextually accurate search results. This technique, referred to as semantic search, has the potential to change the way users interact with information retrieval systems.

Building Blocks

We assume you need no prior knowledge of the internals of semantic search, so let's get started with the building blocks that power the system.

Embeddings:

A numerical representation (a vector, to be precise) of any content (words, pictures, sound, etc.). In other words, an embedding is a point in an n-dimensional space.

(ML) Models:

In this context, you can consider a model as a black box that takes content, such as a word, and returns an embedding. If you think of the model as placing each piece of content in an n-dimensional space, a good model places similar content close together or along the same direction.

Vector DB: A database that stores embeddings and can query them using various similarity techniques. For our use case, we have gone with Elasticsearch as the vector DB.

KNN Search:

K-Nearest Neighbour search is a search technique supported by the vector DB (ES in this case). The k nearest neighbours of the query are returned as the search result, where "near" is defined by the chosen similarity technique.
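To make the idea concrete, here is a brute-force sketch of a k-nearest-neighbour lookup over a handful of toy vectors. It scans every stored vector, which is only practical for tiny datasets; Elasticsearch's kNN search relies on an approximate index instead. The names `knn` and `l2_distance` and the sample vectors are assumptions made for this example.

```python
import math

def l2_distance(a, b):
    """Straight-line (Euclidean) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn(query, vectors, k, distance=l2_distance):
    """Return the names of the k stored vectors closest to the query."""
    ranked = sorted(vectors.items(), key=lambda item: distance(query, item[1]))
    return [name for name, _ in ranked[:k]]

# Hypothetical 2-dimensional embeddings keyed by a document name.
stored = {"a": [0.0, 0.0], "b": [1.0, 1.0], "c": [5.0, 5.0]}
nearest = knn([0.9, 0.9], stored, k=2)
```

The `distance` parameter is where the chosen similarity technique plugs in; swapping it changes what "near" means without touching the rest of the lookup.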

L2 similarity:

Similarity calculated from the straight-line distance between two vectors. This is the most basic measure and is often less useful in more sophisticated cases, because it is sensitive to the magnitude of the vectors.

Cosine similarity:

Similarity based on the angle between the given vectors. That is, if two points lie on the same line from the origin but are placed far apart, they are still considered the same.
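The contrast between the two measures shows up clearly with two vectors that point in the same direction but have different lengths. The following is a small standard-library sketch; the vectors `p` and `q` are arbitrary values picked for illustration.

```python
import math

def l2_distance(a, b):
    """Euclidean distance: grows with the gap between the two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: depends on direction only, not on length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

p = [1.0, 2.0]
q = [3.0, 6.0]  # same direction as p, three times as long

distance = l2_distance(p, q)
similarity = cosine_similarity(p, q)
```

The L2 distance between `p` and `q` is clearly non-zero, yet their cosine similarity is (up to floating-point rounding) 1, which is why cosine similarity treats them as the same.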

Harnessing Text Embeddings for Semantic Search

Having seen the definition of an embedding, note that there are several methods to generate embeddings; some of the most common approaches use models such as Word2Vec, GloVe, and BERT. Text embedding algorithms process large amounts of text data and learn word representations that capture semantic and syntactic relationships. Internally, texts are tokenized into words (or subword tokens), and each token is mapped to its corresponding embedding vector. The embeddings of the individual tokens in a text can be combined using averaging, concatenation, or other aggregation methods to obtain a fixed-size representation of the entire text.
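The averaging step can be sketched as follows. The three-dimensional `toy_vectors` are invented for the example and stand in for vectors a real model would have learned; `average_embedding` is likewise a hypothetical helper, not a library function.

```python
def average_embedding(tokens, word_vectors):
    """Average the vectors of the known tokens into one fixed-size text vector."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        raise ValueError("none of the tokens has an embedding")
    dims = len(known[0])
    return [sum(vec[d] for vec in known) / len(known) for d in range(dims)]

# Toy word vectors, made up for this illustration.
toy_vectors = {
    "funny": [1.0, 0.0, 0.0],
    "cat": [0.0, 1.0, 0.0],
    "videos": [0.0, 0.0, 1.0],
}

sentence_vector = average_embedding("funny cat videos".split(), toy_vectors)
short_vector = average_embedding(["cat"], toy_vectors)
```

Both results have three dimensions even though the inputs differ in length, which is what makes texts of any size comparable to each other.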

In our use case we have used the Sentence Transformers library, which maps sentences and paragraphs to embeddings so that texts expressing the same meaning in different ways land close together. Changes in sentence structure, word order, synonyms, or verb tense that do not alter the essence of the original message should therefore have little effect on the embedding. The library is built on top of the Hugging Face Transformers library, which includes a wide range of transformer-based NLP models, including BERT, RoBERTa, GPT, and more. SentenceTransformer utilizes these transformer models to create powerful sentence embeddings for different use cases.

For our use case, the text content associated with the videos (title, description, and other info) is given to Sentence Transformers to create the embeddings, which are then stored in the vector DB. The dimensions of the vector vary depending on the specific pre-trained model from the SentenceTransformer library. For this particular use case, we have opted for 'all-MiniLM-L6-v2', which is a BERT-based model with a dimensionality of 384.

    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer('all-MiniLM-L6-v2')
    text_embedding = model.encode(content)  # generate embedding for the content

Before the content is given to a model, it can optionally be passed through langchain to divide it by chunk_size if it is too big, or to split it in a logical way using Natural Language Processing. Embeddings are created for each split and stored as separate entries in the same index. This factor also influences the nearest match for the text embeddings in semantic search with respect to the query. We have used the langchain text splitter named RecursiveCharacterTextSplitter to split content above a chunk size of 2000 characters. This may vary according to the use case.
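To show what chunking does to the content, here is a simplified stand-in written with the standard library only. It is not langchain's RecursiveCharacterTextSplitter, which tries a sequence of separators; this sketch just packs whitespace-separated words greedily into chunks of at most `chunk_size` characters. The function name `split_text` and the sample text are assumptions for the example.

```python
def split_text(text, chunk_size=2000):
    """Greedily pack words into chunks no longer than chunk_size characters."""
    chunks, current = [], ""
    for word in text.split():
        # A single word longer than chunk_size is cut into fixed-size pieces.
        while len(word) > chunk_size:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(word[:chunk_size])
            word = word[chunk_size:]
        candidate = word if not current else current + " " + word
        if len(candidate) <= chunk_size:
            current = candidate
        else:
            chunks.append(current)  # current chunk is full, start a new one
            current = word
    if current:
        chunks.append(current)
    return chunks

# Hypothetical long description: 1000 repetitions of a five-character word.
long_text = " ".join(["video"] * 1000)
chunks = split_text(long_text, chunk_size=2000)
```

Each element of `chunks` would then be embedded and indexed as its own entry, so a long description contributes several vectors to the same index.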

Harnessing Thumbnail Image Embeddings for Semantic Search

Now that we have leveraged the text content associated with the videos, we can make the search more meaningful by also leveraging the thumbnail image associated with each video.

Semantic search with video thumbnails is an approach that leverages visual content to enhance the search process and improve the relevance of search results. Video thumbnails are small preview images that represent the content of a video. They provide a visual summary or snapshot of the video's key elements. We can extract valuable information from the image and capture it in the form of embeddings with the help of neural network models.

Each model has a different number of dimensions, and these play a significant role in finding the similarities between the objects captured. Here we have gone with the CLIP model developed by OpenAI, with 768 dimensions for the image embedding.

    from transformers import AutoModelForZeroShotImageClassification, AutoProcessor

    model_name = "openai/clip-vit-large-patch14"
    processor = AutoProcessor.from_pretrained(model_name)
    model_image_classify = AutoModelForZeroShotImageClassification.from_pretrained(model_name)

The image is fed into the model to generate embeddings. These embeddings are obtained by applying a deep learning model to the processed image. The function uses PyTorch to perform the computations and converts the resulting image features into a NumPy array. Finally, the image features are returned as the output of the function, providing a numerical representation of the image's content. They are represented as a high-dimensional vector and are stored in the DB along with the other embeddings and content.

    import torch

    # import_local_model is assumed to be the module holding the processor and model loaded above
    inputs = import_local_model.processor(images=image, return_tensors="pt", padding=True)
    with torch.no_grad():
        # generate embedding for the image
        image_features = import_local_model.model_image_classify.get_image_features(**inputs)[0]
    image_embedding = image_features.detach().cpu().numpy()

Connecting The Building Blocks

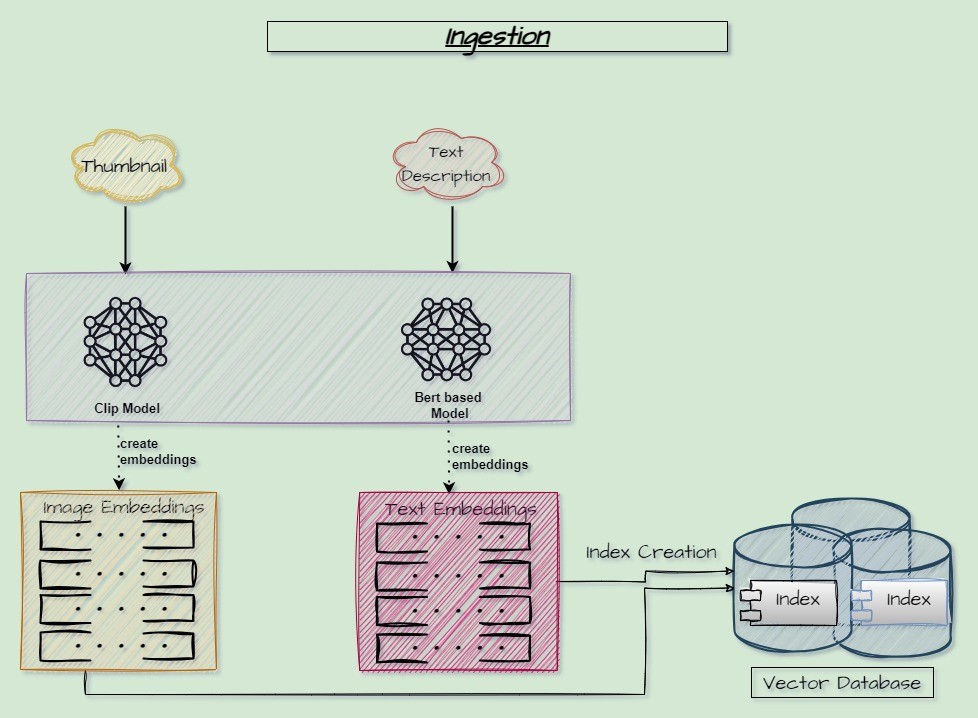

So far we have seen how to generate embeddings for the text information and thumbnail images of different videos with the help of models.

These generated embeddings, with their different dimensions, are saved (indexed) in the vector DB. This is what we call data ingestion.

Before ingestion, we need to decide on the similarity strategy and create the index accordingly, which we take a deeper look at in the Implementation of Semantic Search part later in this blog.

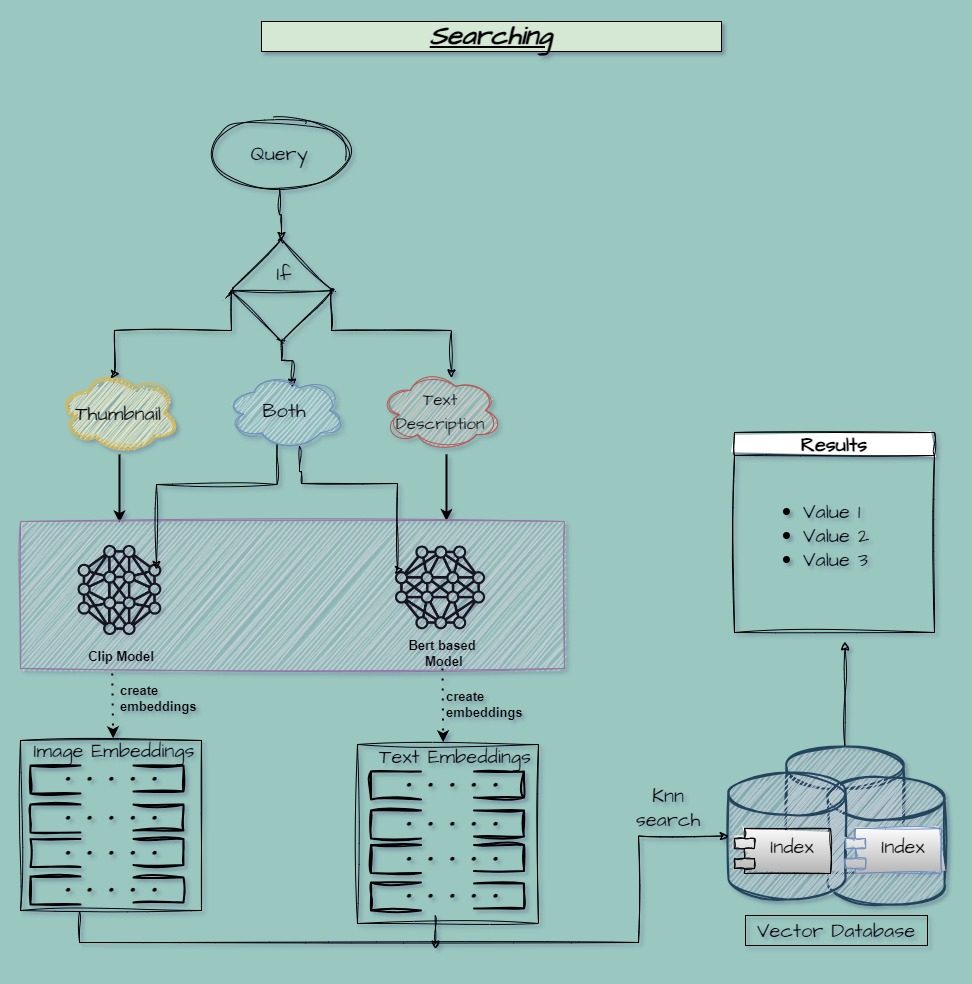

For the final step, searching, the query provided by the user is also converted into embeddings by the same process using the same models. Once the embeddings are generated by the deep learning models, their role concludes. The vector database then takes over, applies the specified similarity measure, and identifies the closest matching content, which is then ranked or scored. The score assigned is inversely related to the distance between the feature vectors (under whichever similarity approach you chose): the closer the vectors, the higher the score.

Next, we will examine what similarity measures are and what they contribute to the implementation of semantic search.
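As a rough illustration of how a raw similarity or distance turns into a score, the sketch below uses the mappings that, as we understand the Elasticsearch documentation for dense_vector fields, are applied for the cosine and l2_norm similarities. Treat the exact formulas as an assumption and verify them against the documentation for your Elasticsearch version; the function names are ours.

```python
def cosine_score(cosine):
    """Map a cosine similarity in [-1, 1] to a score in [0, 1] (assumed ES mapping for 'cosine')."""
    return (1.0 + cosine) / 2.0

def l2_score(distance):
    """Map an L2 distance in [0, inf) to a score in (0, 1] (assumed ES mapping for 'l2_norm')."""
    return 1.0 / (1.0 + distance ** 2)

identical = cosine_score(1.0)   # vectors pointing the same way
opposite = cosine_score(-1.0)   # vectors pointing in opposite directions
far_apart = l2_score(3.0)       # larger distance, smaller score
```

Under both mappings the score rises as the vectors get closer, and identical vectors reach the maximum score of 1.0.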

Impact of Cosine Similarity On Video Semantics

Now that we have leveraged the text and thumbnail images associated with the videos to make the search more meaningful, we can look at how similarity techniques help carry out semantic search in vector DBs.

Similarity in vector databases refers to how closely vectors (numerical representations of data) match each other in a high-dimensional space. Higher similarity indicates greater resemblance between vectors, and it is often used for tasks like information retrieval and recommendation systems. There are many similarity techniques, such as Euclidean distance, dot product, Hamming distance, and more.

Euclidean distance calculates the straight-line distance between the feature vectors of the query and a stored item in a high-dimensional space. Smaller distances indicate higher similarity. Cosine similarity, in contrast, measures the cosine of the angle between two embedding vectors. It considers the orientation of the vectors rather than their magnitude, making it suitable for comparing high-dimensional feature representations.

In content-based retrieval for videos using video thumbnails or by video metadata, various similarity techniques can be applied to measure the similarity between query thumbnails and video thumbnails or query text and video info like titles and descriptions.

Cosine similarity is widely used in semantic search for both text and images, and it often produces better results than other similarity techniques. It should be specified when creating the index in the vector DB.

Implementation of Semantic Search

Creating an index in Elasticsearch with cosine similarity

To create an ES index, you need to specify a few fields with the type dense_vector and similarity cosine.

dense_vector is a field type that stores dense vectors of numeric values. The index is then populated from a list of video metadata and their corresponding thumbnail images, for which the high-dimensional embedding vectors are generated.

The following is a sample body for index creation in the Elasticsearch vector DB.

    from elasticsearch import Elasticsearch

    es = Elasticsearch([{'host': es_host, 'port': es_port}], http_auth=(username, password))
    index_body = {
        "mappings": {
            "properties": {
                "content": {"type": "text"},
                "text_embedding": {
                    "type": "dense_vector",
                    "dims": 384,  # varies based on model we choose
                    "index": True,
                    "similarity": "cosine"
                },
                "image_embedding": {
                    "type": "dense_vector",
                    "dims": 768,  # varies based on model we choose
                    "index": True,
                    "similarity": "cosine"
                }
            }
        }
    }
    response = es.indices.create(index=index_name, body=index_body)

With the index created and the documents indexed, the data ingestion process is complete: both text and image embeddings, along with the content, are stored in the Elasticsearch vector DB.

Sample Elasticsearch query body for the KNN search

A query can be text only, image only, or a combination of both. In all cases, the corresponding embeddings are generated and distance matching is done on those embedding vectors.

To conduct an efficient search, we can utilize the K-Nearest Neighbors (KNN) algorithm. This algorithm finds the nearest neighbors based on the similarity metric defined during index creation. By specifying the desired number of neighbors (k) and setting a minimum score threshold, we can control the number of neighbors displayed and improve result accuracy.

We can limit the number of results by providing size. We can also run a combined search over text and image by providing boost, which scales the score of each KNN clause by the provided value.

If boost_of_image_embedding and boost_of_text_embedding are 0.5 and 0.5 respectively, the text and image similarities each contribute 50% to the result.

boost_of_image_embedding + boost_of_text_embedding should be equal to 1.

The final score is (boost_of_image_embedding * image_score) + (boost_of_text_embedding * text_score).

For text-only similarity, boost_of_image_embedding should be zero, and vice versa.
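The weighting rule above can be written out as a small helper. This is only a sketch of the arithmetic, not of what Elasticsearch does internally; the name `combined_score` and the sample scores are assumptions for illustration.

```python
def combined_score(text_score, image_score, text_weight):
    """Weighted sum of the two similarity scores; the image weight is the remainder to 1."""
    if not 0.0 <= text_weight <= 1.0:
        raise ValueError("text_weight must be between 0.0 and 1.0")
    image_weight = 1.0 - text_weight  # keeps the two boosts summing to 1
    return text_weight * text_score + image_weight * image_score

balanced = combined_score(text_score=0.9, image_score=0.7, text_weight=0.5)
text_only = combined_score(text_score=0.9, image_score=0.7, text_weight=1.0)
image_only = combined_score(text_score=0.9, image_score=0.7, text_weight=0.0)
```

Deriving the image weight from the text weight is one simple way to guarantee the two boosts always add up to 1.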

    from elasticsearch import Elasticsearch

    es = Elasticsearch([{'host': es_host, 'port': es_port}], http_auth=(username, password))
    query_body = {
        "knn": [
            {
                "field": "text_embedding",
                "query_vector": text_embedding,
                "k": 50,
                "num_candidates": 100,
                "boost": text_weight  # 0.0 <= x <= 1.0
            },
            {
                "field": "image_embedding",
                "query_vector": image_embedding,
                "k": 50,
                "num_candidates": 100,
                "boost": image_weight  # 0.0 <= x <= 1.0
            }
        ],
        "min_score": query.score,  # 0.1 <= x <= 1.0
        "size": query.max_result_size
    }
    search_result = es.search(index=query.index, body=query_body)

Results

Having done the search, let's see how to interpret the results and analyse their similarity scores to find the best matches for the given query.

Each entry in the result has three parts:

- Original content

- Its reference link/video link

- And the score for that particular content.

The list of results is returned in decreasing order of score. The result with the highest score is the nearest one. A score of 1.0 indicates an exact match.

The following are sample responses for thumbnail and text semantic suggestions from our experiments, using cosine similarity:

[ { "question": "https://i.ytimg.com/vi/fsav8UDO0uw/hqdefault.jpg", "data": [ { "content": "Title: Prince William launches campaign to end homelessness \u2013 BBC News Description:Prince William is launching a major five-year campaign to end homelessness, which he said should not exist in a \"modern and progressive society\". The Prince of Wales's charitable foundation is putting in \u00a33m of start-up funding to help make homelessness \"rare, brief and unrepeated\". Six locations across the UK will be used to test ideas to cut homelessness. Please subscribe here: http://bit.ly/1rbfUog #Royals #PrinceWilliam #BBCNews ", "reference": "https://www.youtube.com/watch?v=uS563DYnUXQ", "score": "0.9123167" }, { "content": "Title: Prince Philip: Officer, husband, father - BBC News Description:His Royal Highness Prince Philip, Duke of Edinburgh, lived most of his long life in the public eye. Born a European prince, he became the husband and vital support to one of the most famous women in the world. In the process he carved a leading role of his own in Britain and the world. ", "reference": "https://www.youtube.com/watch?v=snpRwWCVhKY", "score": "0.91107905" }, { "content": "Title: Boris Johnson faces showdown with MPs over powers to breach international law - BBC News Description:Boris Johnson is facing a showdown with MPs, including many Conservatives, when they debate controversial proposals that would allow the government to ignore key parts of its Brexit withdrawal agreement with the EU. MPs are preparing to vote on the government\u2019s plan \u2014 which would allow it to change or ignore rules agreed for the movement of goods between Britain and Northern Ireland. The government has admitted that those proposals would breach International law. That has provoked widespread criticism, with some senior Conservatives refusing to support it. 
However the Justice Secretary, Robert Buckland has defended the plan, calling it an emergency Brexit \u201cinsurance policy\u201d if the EU acts unreasonably during the current talks on a future trade deal. Clive Myrie presents BBC News at Ten reporting by chief political correspondent Vicki Young. Please subscribe HERE http://bit.ly/1rbfUog ", "reference": "https://www.youtube.com/watch?v=UG6KPDtnVU8", "score": "0.90740794" }, { "content": "Title: UK government yet to bring in restrictions to counter Omicron threat - BBC News Description:Pressure is growing on the UK government to bring in restrictions before Christmas to counter the rising threat from the Omicron variant of the Coronavirus. The Prime Minister Boris Johnson held a two-hour meeting with his cabinet on Wednesday to talk about the spread but after the lengthy discussions, he did not reveal any new measures. A former member of the Sage scientific group which advises the UK government says the rate at which Omicron spreads is more of a threat than its severity. Sir Jeremy Farrar of the Wellcome Trust described its spread as \"unbelievably fast\" and transmission rates as \"eye-wateringly high\". 
Please subscribe HERE http://bit.ly/1rbfUog #BBCNews #Omicron ", "reference": "https://www.youtube.com/watch?v=PgQAWCVC0gg", "score": "0.89934075" } ] } , { "question": "Title: Ukraine war: Russia's Wagner Group boss says he will pull troops out of Bakhmut - BBC News\nDescription: The leader of Russia's Wagner Group says he will withdraw his troops from the Ukrainian city of Bakhmut by Wednesday, in a row over ammunition.\n\nHis statement came after he posted a gruesome video of him walking among dead fighters' bodies, asking defence officials for more supplies.\n\nRussia has been trying to capture the city for months, despite its questionable strategic value.\n\nYevgeny Prigozhin pinned his decision squarely on the defence ministry.\n\nPlease subscribe here: http://bit.ly/1rbfUog\n\n#Ukraine #Russia #BBCNews", "data": [ { "content": "Title: Putin vows to punish mercenaries as Wagner leader calls for rebellion against army – BBC News Description:Russian president Vladimir Putin has vowed to punish mercenaries involved in an apparent armed mutiny. Wagner group leader Yevgeny Prigozhin has called for a rebellion against the army, and while he has denied attempting a coup, it is understood the group has taken the Russian city of Rostov-on-Don near the Ukraine border. Prigozhin accused the army of launching a deadly attack on his forces in Ukraine, where Wagner troops are fighting for Russia – which Moscow has denied. Now, in recent hours, security in Russia has been tightened, with internet restricted and military trucks spotted on Moscow’s streets. Please subscribe here: http://bit.ly/1rbfUog #Russia #WagnerGroup #BBCNews", "reference": "https://www.youtube.com/watch?v=HAyiy-3hwyE", "score": "0.882637" }, { "content": "Title: Has Wagner's rebellion changed the Ukraine war for Russia? 
- BBC Newsnight Description:Warning: There are graphic images in this film There were reports that President Putin had to be talked out of killing his former ally - the head of the mercenary Wagner Group Yezgeny Prigozhin, and that Mr Prigozhin had expected the Russian army to change sides to support him. That's according to the Belarus leader President Lukashenko who's given asylum to Mr Prigozhin as part of the deal to end the revolt. He was speaking at a press conference yesterday. President Putin meanwhile tried to paint a picture of life returning to normal. The Russian authorities said Wagner will be disarmed but its members will escape prosecution over its short-lived rebellion. Newsnight’s Diplomatic Editor Mark Urban reports. Please subscribe HERE bit.ly/1rbfUog — Website: https://www.bbc.co.uk/newsnight Twitter: https://twitter.com/BBCNewsnight Facebook: https://www.facebook.com/bbcnewsnight #Newsnight #BBCNews", "reference": "https://www.youtube.com/watch?v=xz54uo0nXK8", "score": "0.8814683" }, { "content": "Title: Wagner mercenaries say they control key Russian city as convoy heads towards Moscow – BBC News Description:Wagner mercenaries have taken control of a Russian city key to their war effort in Ukraine, with a convoy of their troops reportedly on route to Moscow. Rostov-on-Don, near the Ukraine border, is seemingly under Wagner control, after their leader Yevgeny Prigozhin called for a rebellion against the army. Key military facilities in the city of Voronezh - which is halfway between Rostov and Moscow, are also said to be controlled by Wagner forces. Russian President Vladimir Putin earlier described their actions as 'a knife in the back of our people', and residents of the Russian capital have been told to stay at home at this time. Please subscribe here: http://bit.ly/1rbfUog #Russia #WagnerGroup #BBCNews", "reference": "https://www.youtube.com/watch?v=W25R7KkBmsM", "score": "0.86847734" } ] } ]
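A response of the shape shown above can be consumed with a few lines of Python. The sketch below is a hypothetical helper, not part of our service: `best_matches` and the `sample_response` values are made up, and only the field names (`question`, `data`, `content`, `reference`, `score`) are taken from the samples.

```python
def best_matches(response, min_score=0.0, limit=3):
    """Return (question, reference, score) tuples for the top results of each question."""
    rows = []
    for entry in response:
        # Scores arrive as strings in the sample response, so convert before comparing.
        scored = [(float(hit["score"]), hit["reference"]) for hit in entry["data"]]
        scored.sort(reverse=True)  # highest score first
        for score, reference in scored[:limit]:
            if score >= min_score:
                rows.append((entry["question"], reference, score))
    return rows

# Hypothetical response with the same shape as the samples above.
sample_response = [
    {
        "question": "example query",
        "data": [
            {"content": "Title: A", "reference": "https://example.com/a", "score": "0.91"},
            {"content": "Title: B", "reference": "https://example.com/b", "score": "0.88"},
            {"content": "Title: C", "reference": "https://example.com/c", "score": "0.42"},
        ],
    }
]

top = best_matches(sample_response, min_score=0.5)
```

Converting the score to a float first matters because comparing the raw strings would order them alphabetically rather than numerically.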

Overcoming Limitations of Processing Full-Length Videos

Semantic search using this hybrid combination of text and image embeddings overcomes the limitations of processing full-length videos by working on condensed summaries of the content instead. By analyzing condensed representations of a video, such as its thumbnail, title, and description, it enables efficient processing, quick assessment, and improved relevance. This approach reduces the processing requirements and storage demands associated with full-length videos while providing users with a visual summary of the video's key elements. The search process becomes more accurate and contextually relevant. This hybrid semantic search enhances the user experience by allowing quick browsing and informed decision-making based on visual cues, ultimately improving the overall search experience. An approach like this can be cost-effective and might work for most cases.

Conclusion

In this blog, we explored the evolution of search strategies across different generations, focusing on video suggestions based on metadata, descriptions, and thumbnails. We then delved into the implementation of semantic search using text embeddings and image embeddings. Text embeddings were created using Sentence Transformers, while image embeddings were generated using the CLIP model developed by OpenAI.

Our aim was to provide a foundational understanding of constructing a basic semantic search system, which could potentially serve as a recommendation engine for various applications. As we move forward, it's important to remember that while our example provides a stepping stone, there is still significant room for refining and optimizing this system to fit your specific problem or use case.

We would love to hear from you! Reach us @

info@techconative.com