Improving Google Cloud Build CI/CD pipeline performance by 350% for an additional 8 cents per build!

Sun Apr 16 2023

Context

Continuous Integration / Continuous Deployment (CI/CD) pipelines allow organizations to ship software quickly and efficiently. Automated build and test stages triggered by CI ensure that the code changes being merged into the repository are reliable, and the CD process then delivers that code quickly and seamlessly. With CI/CD, incremental code changes can be shipped frequently and reliably.

As time goes on, new features are added along with their code, dependencies, and verification/validation tests. This gradually increases the pipeline's build duration, making it hard to do several releases or rollbacks in a day when required. Software delivery becomes time consuming, and the cost of running these pipelines goes up. So we definitely needed to optimize our CI/CD pipeline, and in this blog we share the solutions that worked for us. (Please have a look at our previous blog on building cloud-native CI/CD pipelines here.)

Problem

Within a couple of months, our CI/CD pipeline ran into exactly this scenario. Running on GCP Cloud Build with the default machine type, e2-medium, it took almost an hour to complete all the stages and deliver the code to users. Even a hot patch meant waiting nearly an hour for the pipeline to finish, which is not an efficient process.

Solution

Our approach to the problem was multi-pronged. As a first step, we upgraded from the default machine type offered by the cloud service, e2-medium (1 vCPU, 4 GB memory), to e2-highcpu-8 (8 vCPU, 8 GB memory) and re-ran the pipeline.

cloudbuild.yaml file

```yaml
options:
  machineType: "E2_HIGHCPU_8"
```

As expected, the better machine (e2-highcpu-8) reduced the overall pipeline duration. It also directly affects the cost of running the pipeline: Cloud Build charges per build-minute, and a larger machine type has a higher per-minute rate than the default one.
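Because the per-build cost is simply duration times the per-minute rate of the machine type, the trade-off from the machine-type switch can be sketched with a little arithmetic. The symbols below are our own shorthand: $T$ is build duration in minutes and $r$ is the price per build-minute, which you would take from the current Cloud Build pricing page.

$$
C = T \cdot r, \qquad
\Delta C = T_{\text{new}}\, r_{\text{new}} - T_{\text{old}}\, r_{\text{old}}, \qquad
\Delta T = T_{\text{old}} - T_{\text{new}}
$$

The larger machine is outright cheaper per build when $T_{\text{new}} / T_{\text{old}} < r_{\text{old}} / r_{\text{new}}$. Otherwise, $\Delta C / \Delta T$ is the price you pay for each minute of build time saved.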

To strike a balance between time and cost, we added a few more optimizations to our pipeline, detailed below.

Added kaniko cache to the Docker build - We added a new stage that uses the cloud builder gcr.io/kaniko-project/executor:latest to build our application image, reusing the cache on every build:

cloudbuild.yaml file

```yaml
# Building Docker image with kaniko cache
- id: build-application-image
  name: "gcr.io/kaniko-project/executor:latest"
  args:
    - --destination=gcr.io/$PROJECT_ID/application:latest
    - --cache=true
    - --cache-ttl=720h # 30 days expressed in hours
  waitFor: ["git-submodule"]
```

With the kaniko cache added, the pipeline builds the image using cached layers from previous runs, tags it with the given label (latest), and pushes it to the Container Registry destination. This also removes the separate Cloud Build stage for tagging and pushing the Docker image, which saves more time.
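For comparison, a typical Cloud Build setup without kaniko builds the image with the Docker cloud builder and then pushes it in a separate step. The following is a generic sketch, not our exact previous configuration, and the step ids are illustrative. Each Cloud Build run gets a fresh worker, so this Docker build does not reuse layers from earlier runs unless you add something like `--cache-from`. With kaniko's `--cache=true`, cached layers are stored in a registry repository instead, which by default is derived from the destination.

```yaml
steps:
  # Build and tag the image with the Docker cloud builder (illustrative step id)
  - id: docker-build
    name: "gcr.io/cloud-builders/docker"
    args: ["build", "-t", "gcr.io/$PROJECT_ID/application:latest", "."]
  # Push the tagged image to Container Registry in a separate step
  - id: docker-push
    name: "gcr.io/cloud-builders/docker"
    args: ["push", "gcr.io/$PROJECT_ID/application:latest"]
```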

Tip: To get the most out of the cache, we modified our Dockerfile so that frequently changing layers come last, and we use npm ci to install the Node dependencies. For more info on this, check here.

Parallel Test Execution - We use the WebdriverIO automation framework with functional and end-to-end UI test suites covering various user flows (200+ tests). They are executed through the WebdriverIO Docker service, which runs a Selenium Hub with Chrome/Firefox browser nodes. Here, we added the SE_NODE_MAX_SESSIONS environment variable and set it to 8:

docker-compose.yaml file

```yaml
chrome:
  image: selenium/node-chrome:4.7.1-20221208
  depends_on:
    - selenium-hub
  environment:
    - SE_EVENT_BUS_HOST=selenium-hub
    - SE_EVENT_BUS_PUBLISH_PORT=4442
    - SE_EVENT_BUS_SUBSCRIBE_PORT=4443
    - SE_NODE_MAX_SESSIONS=8
  ports:
    - "6900:5900"
```

The number of parallel sessions depends on the processors available on the machine. We started with 2 sessions, then increased to 3, 5, 6, and finally 8. In our case, 8 sessions worked best, so we use them to run 8 test suites in parallel in the Chrome or Firefox browser.
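For context, the chrome node configured above registers with a Selenium Hub over the event bus on ports 4442/4443. The compose file below is a minimal sketch modeled on Selenium's docker-compose example, not our exact file. The hub service, the firefox node, its image tag, and `shm_size` are assumptions added for illustration.

```yaml
services:
  selenium-hub:
    image: selenium/hub:4.7.1-20221208
    container_name: selenium-hub
    ports:
      - "4442:4442" # event bus publish port
      - "4443:4443" # event bus subscribe port
      - "4444:4444" # Grid endpoint that the test runner connects to

  chrome:
    image: selenium/node-chrome:4.7.1-20221208
    shm_size: 2gb # browsers need more shared memory than Docker's default
    depends_on:
      - selenium-hub
    environment:
      - SE_EVENT_BUS_HOST=selenium-hub
      - SE_EVENT_BUS_PUBLISH_PORT=4442
      - SE_EVENT_BUS_SUBSCRIBE_PORT=4443
      - SE_NODE_MAX_SESSIONS=8 # up to 8 concurrent browser sessions on this node

  # Assumed firefox node, mirroring the chrome node
  firefox:
    image: selenium/node-firefox:4.7.1-20221208
    shm_size: 2gb
    depends_on:
      - selenium-hub
    environment:
      - SE_EVENT_BUS_HOST=selenium-hub
      - SE_EVENT_BUS_PUBLISH_PORT=4442
      - SE_EVENT_BUS_SUBSCRIBE_PORT=4443
      - SE_NODE_MAX_SESSIONS=8
```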

Tip: For the best execution time, prefer many individual test suites/files, each with a small number of tests. For example, if you have 50 tests in one file, try splitting it into 5 separate test files of 10 tests each.
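The reason for this tip is that WebdriverIO hands each spec file to a worker as a unit, so the tests inside one file run one after another. The arithmetic for the 50-test example is shown below. It assumes every test takes roughly the same time $t$ and that enough sessions are free; with 8 sessions, all 5 files fit at once.

$$
T_{\text{1 file}} = 50\,t, \qquad
T_{\text{5 files}} = \max_{1 \le i \le 5} 10\,t = 10\,t
$$

That gives roughly a 5× shorter wall-clock time for that suite. The estimate ignores the per-file browser start-up overhead, which is why splitting into ever-smaller files eventually stops paying off.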

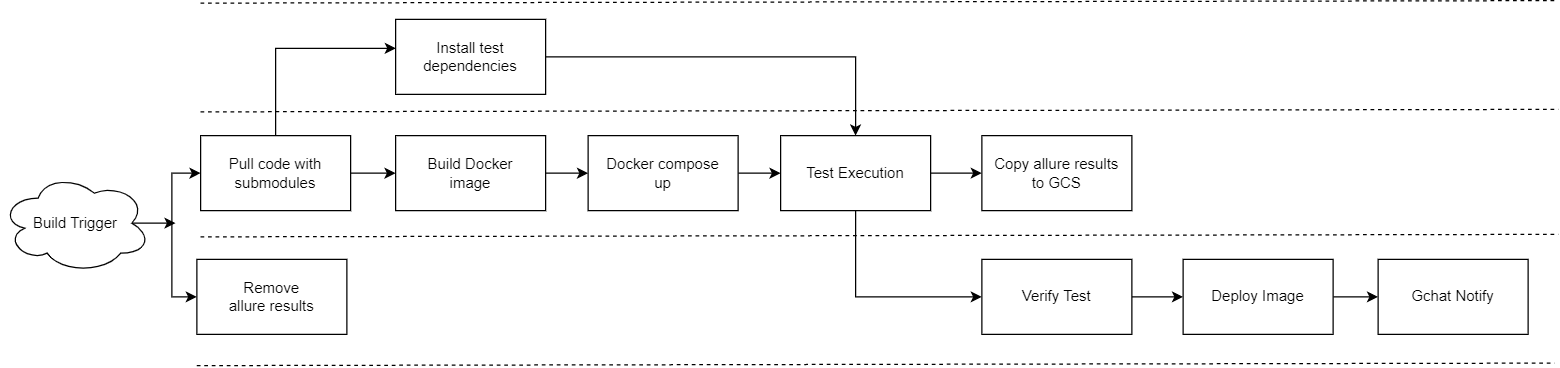

Parallel Cloud Build stages execution - Our quest to optimize performance was still on, and we found that Cloud Build's configuration lets build steps run in parallel, which reduced the build time further. In our pipeline, we identified the stages that can be executed in parallel and added the waitFor field to them.
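The sketch below shows how `waitFor` shapes the step graph. The kaniko step reuses the arguments shown earlier. Everything else is hypothetical and not our actual pipeline: the step ids, the `node:18` image, and the npm scripts `lint` and `test:e2e`. In Cloud Build, a step without `waitFor` waits for all previous steps, while `waitFor: ["-"]` starts a step as soon as the build starts. We also assume a `.dockerignore` that excludes `node_modules`. Without it, `npm ci` writing into `/workspace` could race with kaniko reading the same directory as its build context.

```yaml
steps:
  # Starts immediately ("-"): builds and pushes the image with kaniko cache
  - id: build-application-image
    name: "gcr.io/kaniko-project/executor:latest"
    args:
      - --destination=gcr.io/$PROJECT_ID/application:latest
      - --cache=true
      - --cache-ttl=720h
    waitFor: ["-"]

  # Also starts immediately, in parallel with the image build
  # (assumes node_modules is excluded via .dockerignore)
  - id: install-dependencies
    name: "node:18"
    entrypoint: "npm"
    args: ["ci"]
    waitFor: ["-"]

  # lint and unit-tests depend only on install-dependencies,
  # so they run in parallel with each other
  - id: lint
    name: "node:18"
    entrypoint: "npm"
    args: ["run", "lint"] # hypothetical npm script
    waitFor: ["install-dependencies"]

  - id: unit-tests
    name: "node:18"
    entrypoint: "npm"
    args: ["test"]
    waitFor: ["install-dependencies"]

  # Joins both branches: runs only after the image and the checks finish
  - id: ui-tests
    name: "node:18"
    entrypoint: "npm"
    args: ["run", "test:e2e"] # hypothetical npm script
    waitFor: ["build-application-image", "lint", "unit-tests"]
```

With this layout, the total duration is set by the longest chain of dependent steps rather than by the sum of all steps.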

To summarize the solutions we tried: with the e2-highcpu-8 machine type, kaniko cache for the Docker build, parallel test execution, and parallel Cloud Build steps, our CI/CD pipeline duration dropped from almost an hour to ~15 minutes.

Time vs. cost comparison of different Cloud Build setups in GCP

Spending 8 cents ($0.08) more per build saved us 40 minutes of build time! With this setup, we were able to find a balance between time and cost for our pipeline.
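Using our own back-of-the-envelope framing, the figures above translate into a price per minute of build time saved:

$$
\frac{\Delta C}{\Delta T} = \frac{\$0.08}{40\ \text{min}} = \$0.002\ \text{per minute saved} = \$0.12\ \text{per hour saved}
$$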

We would love to hear from you! Reach us @

info@techconative.com